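Chat with Your PDFs Using Pinata, OpenAI, and Streamlit

In this tutorial, we'll build a simple chat interface that lets users upload a PDF, query its content through OpenAI's API, and see the responses in a chat-like interface built with Streamlit. We'll also use Pinata to upload and store the PDF files.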

Prerequisites:

- Basic knowledge of Python
- A Pinata API key (for uploading PDFs)
- An OpenAI API key (for generating responses)
- Streamlit installed (for building the UI)

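Step 1: Project setup

Start by creating a new project directory with a virtual environment, then install the dependencies. python-dotenv is included because the helper modules load their keys with load_dotenv:

    mkdir chat-with-pdf
    cd chat-with-pdf
    python3 -m venv venv
    source venv/bin/activate
    pip install streamlit openai requests PyPDF2 python-dotenv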

Now create a .env file in the project root and add the following environment variables:

    PINATA_API_KEY=<Your Pinata API Key>
    PINATA_SECRET_API_KEY=<Your Pinata Secret Key>
    OPENAI_API_KEY=<Your OpenAI API Key>
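A missing or blank key tends to surface later as an opaque authentication error, so it can help to check the environment up front. The sketch below is one possible way to do that; `missing_keys` and `REQUIRED_KEYS` are hypothetical names introduced here for illustration, and the check assumes the variables have already been loaded into the environment (for example by `load_dotenv()`).

```python
import os

# The three variables defined in the .env file above.
REQUIRED_KEYS = ("PINATA_API_KEY", "PINATA_SECRET_API_KEY", "OPENAI_API_KEY")


def missing_keys(env, required=REQUIRED_KEYS):
    """Return the names in `required` that are absent or blank in `env`."""
    return [name for name in required if not (env.get(name) or "").strip()]


if __name__ == "__main__":
    # In the real project, load_dotenv() would populate os.environ first.
    missing = missing_keys(os.environ)
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("All required keys are set.")
```

Passing the environment in as an argument keeps the check easy to try against a plain dictionary.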

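OpenAI API usage is paid, so you'll need to obtain and manage your own OPENAI_API_KEY. Creating a Pinata API key, however, is something we'll walk through together.

Before continuing, let's look at what Pinata is and why we use it.

Pinata is a service that provides a platform for storing and managing files on IPFS (the InterPlanetary File System), a decentralized, distributed file storage system.

- Decentralized storage: Pinata helps you store files on IPFS, a decentralized network.
- Ease of use: it offers user-friendly tools and APIs for file management.
- File availability: Pinata keeps your files accessible by "pinning" them on IPFS.
- NFT support: it is well suited to storing metadata for NFTs and Web3 applications.
- Cost-effectiveness: Pinata can be a cheaper alternative to traditional cloud storage.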

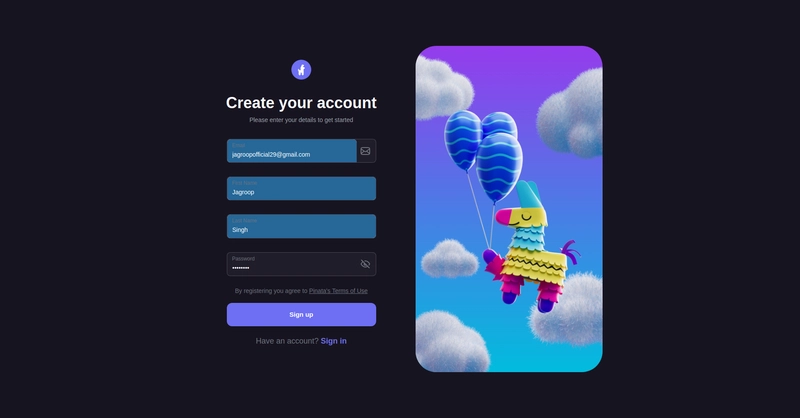

Let's create the required keys, starting by logging in:

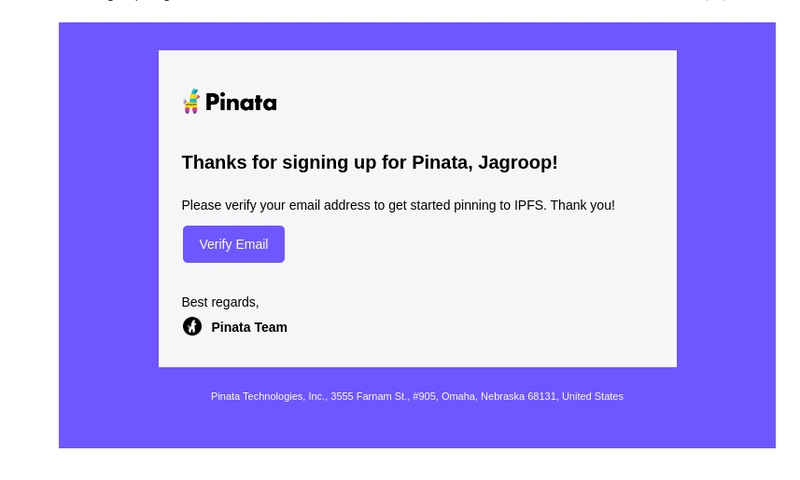

Next, verify your registered email address:

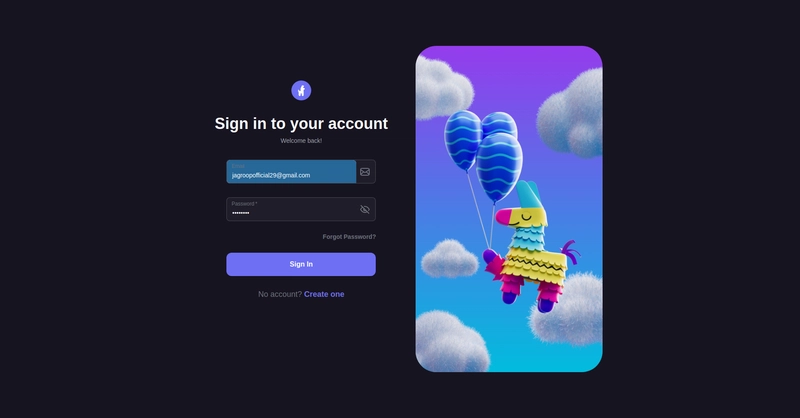

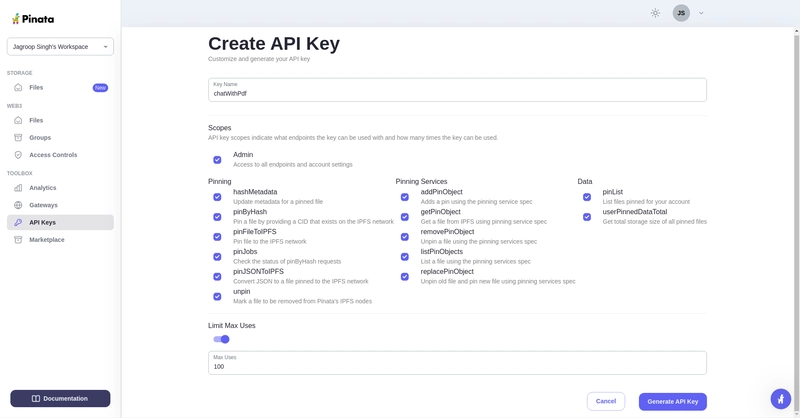

Once your login is verified, go to the API Keys section and create a new API key:

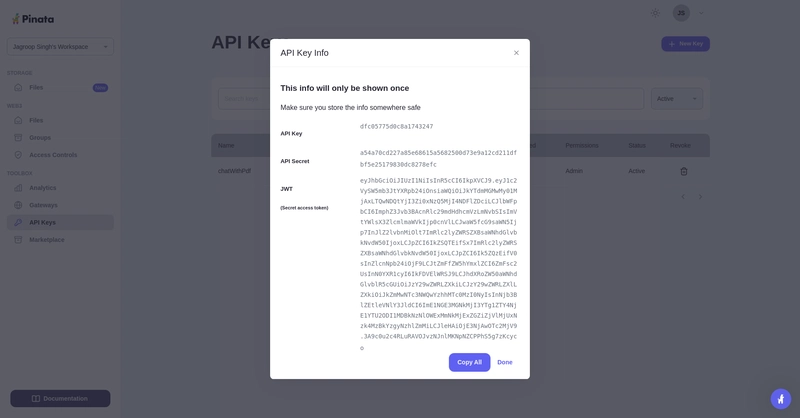

Finally, the key is generated. Copy the key and its secret into your .env file:

    OPENAI_API_KEY=<Your OpenAI API Key>
    PINATA_API_KEY=<Your Pinata API Key>
    PINATA_SECRET_API_KEY=<Your Pinata Secret Key>

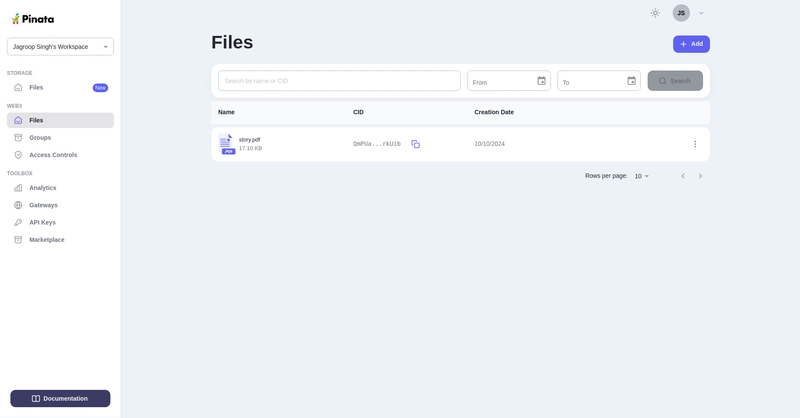

Step 2: Upload PDFs with Pinata

We'll use Pinata's API to upload PDFs and get a hash (CID) for each file. Create a file named pinata_helper.py to handle the PDF upload.

    import os  # Import the os module to interact with the operating system
    import requests  # Import the requests library to make HTTP requests
    from dotenv import load_dotenv  # Import load_dotenv to load environment variables from a .env file

    # Load environment variables from the .env file
    load_dotenv()

    # Define the Pinata API URL for pinning files to IPFS
    PINATA_API_URL = "https://api.pinata.cloud/pinning/pinFileToIPFS"

    # Retrieve Pinata API keys from environment variables
    PINATA_API_KEY = os.getenv("PINATA_API_KEY")
    PINATA_SECRET_API_KEY = os.getenv("PINATA_SECRET_API_KEY")


    def upload_pdf_to_pinata(file_path):
        """
        Uploads a PDF file to Pinata's IPFS service.

        Args:
            file_path (str): The path to the PDF file to be uploaded.

        Returns:
            str: The IPFS hash of the uploaded file if successful, None otherwise.
        """
        # Prepare headers for the API request with the Pinata API keys
        headers = {
            "pinata_api_key": PINATA_API_KEY,
            "pinata_secret_api_key": PINATA_SECRET_API_KEY
        }

        # Open the file in binary read mode
        with open(file_path, 'rb') as file:
            # Send a POST request to Pinata API to upload the file
            response = requests.post(PINATA_API_URL, files={'file': file}, headers=headers)

        # Check if the request was successful (status code 200)
        if response.status_code == 200:
            print("File uploaded successfully")  # Print success message
            # Return the IPFS hash from the response JSON
            return response.json()['IpfsHash']
        else:
            # Print an error message if the upload failed
            print(f"Error: {response.text}")
            return None  # Return None to indicate failure
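Before uploading real files, it can be useful to confirm that the key pair is accepted at all. The sketch below assumes Pinata's authentication test endpoint at `https://api.pinata.cloud/data/testAuthentication` and reuses the same two headers as the upload function; treat the URL as an assumption to confirm against Pinata's documentation. `build_auth_request` and `credentials_work` are hypothetical helper names, and the standard library's `urllib` is used here only to keep the example dependency-free.

```python
import urllib.request

# Assumed endpoint for checking credentials; confirm in the Pinata docs.
PINATA_AUTH_URL = "https://api.pinata.cloud/data/testAuthentication"


def build_auth_request(api_key, secret_key):
    """Build (but do not send) a GET request carrying the Pinata key headers."""
    headers = {
        "pinata_api_key": api_key,
        "pinata_secret_api_key": secret_key,
    }
    return urllib.request.Request(PINATA_AUTH_URL, headers=headers, method="GET")


def credentials_work(api_key, secret_key, timeout=10):
    """Send the request; return True on HTTP 200, False on any network or HTTP error."""
    request = build_auth_request(api_key, secret_key)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are both subclasses of OSError
        return False
```

Calling `credentials_work(PINATA_API_KEY, PINATA_SECRET_API_KEY)` once at startup would separate "bad keys" from "bad upload" when something fails.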

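Step 3: Set up OpenAI

Next, we'll create a function that uses the OpenAI API to work with the text extracted from the PDF. We'll use OpenAI's gpt-4o or gpt-4o-mini model for the chat responses.

Create a new file, openai_helper.py: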

    import os
    from openai import OpenAI
    from dotenv import load_dotenv

    # Load environment variables from .env file
    load_dotenv()

    # Initialize OpenAI client with the API key
    OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
    client = OpenAI(api_key=OPENAI_API_KEY)


    def get_openai_response(text, pdf_text):
        try:
            print("User Input:", text)
            print("PDF Content:", pdf_text)  # Optional: for debugging

            # Combine the user's input and PDF content for context
            messages = [
                {"role": "system", "content": "You are a helpful assistant for answering questions about the PDF."},
                {"role": "user", "content": pdf_text},  # Providing the PDF content
                {"role": "user", "content": text}  # Providing the user question or request
            ]

            # Create the chat completion request
            response = client.chat.completions.create(
                model="gpt-4o-mini",  # Or "gpt-4o", depending on your access
                messages=messages,
                max_tokens=100,  # Caps the reply length; adjust as necessary
                temperature=0.7  # Adjust to control response creativity
            )

            # Extract the content of the response
            return response.choices[0].message.content
        except Exception as e:
            return f"Error: {str(e)}"
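Because the full PDF text is sent as a message, a long document can exceed the model's context window. The sketch below shows one simple mitigation: trimming the text to a character budget before building the messages. `truncate_text` and `build_messages` are hypothetical helpers, and the 12,000-character default is an arbitrary illustrative figure, since characters are only a rough proxy for tokens.

```python
SYSTEM_PROMPT = "You are a helpful assistant for answering questions about the PDF."


def truncate_text(text, max_chars=12000):
    """Trim `text` to at most `max_chars` characters, cutting at a word boundary."""
    if len(text) <= max_chars:
        return text
    cut = text[:max_chars]
    last_space = cut.rfind(" ")
    # Fall back to a hard cut when the chunk contains no usable space.
    return cut[:last_space] if last_space > 0 else cut


def build_messages(question, pdf_text, max_chars=12000):
    """Build the same three-message list as above, with the PDF text trimmed."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": truncate_text(pdf_text, max_chars)},
        {"role": "user", "content": question},
    ]
```

Truncation discards everything past the budget, so questions about later pages may go unanswered; splitting the document into chunks would be the next step if that matters.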

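Step 4: Build the Streamlit interface

Now that the helper functions are ready, it's time to build the Streamlit app, which uploads the PDF, gets responses from OpenAI, and displays the chat.

Create a file named app.py: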

    import streamlit as st
    import os
    import time
    from pinata_helper import upload_pdf_to_pinata
    from openai_helper import get_openai_response
    from PyPDF2 import PdfReader
    from dotenv import load_dotenv

    # Load environment variables
    load_dotenv()

    st.set_page_config(page_title="Chat with PDFs", layout="centered")
    st.title("Chat with PDFs using OpenAI and Pinata")

    uploaded_file = st.file_uploader("Upload your PDF", type="pdf")

    # Initialize session state for chat history and loading state
    if "chat_history" not in st.session_state:
        st.session_state.chat_history = []
    if "loading" not in st.session_state:
        st.session_state.loading = False

    if uploaded_file is not None:
        # Save the uploaded file temporarily (create the folder if it is missing)
        os.makedirs("temp", exist_ok=True)
        file_path = os.path.join("temp", uploaded_file.name)
        with open(file_path, "wb") as f:
            f.write(uploaded_file.getbuffer())

        # Upload PDF to Pinata
        st.write("Uploading PDF to Pinata...")
        pdf_cid = upload_pdf_to_pinata(file_path)

        if pdf_cid:
            st.write(f"File uploaded to IPFS with CID: {pdf_cid}")

            # Extract PDF content; fall back to "" for pages with no extractable text
            reader = PdfReader(file_path)
            pdf_text = ""
            for page in reader.pages:
                pdf_text += page.extract_text() or ""

            if pdf_text:
                st.text_area("PDF Content", pdf_text, height=200)

                # Allow user to ask questions about the PDF
                user_input = st.text_input("Ask something about the PDF:", disabled=st.session_state.loading)

                if st.button("Send", disabled=st.session_state.loading):
                    if user_input:
                        # Set loading state to True
                        st.session_state.loading = True

                        # Display loading indicator
                        with st.spinner("AI is thinking..."):
                            # Simulate loading with sleep (remove in production)
                            time.sleep(1)  # Simulate network delay

                            # Get AI response
                            response = get_openai_response(user_input, pdf_text)

                        # Update chat history
                        st.session_state.chat_history.append({"user": user_input, "ai": response})

                        # Reset loading state
                        st.session_state.loading = False

                # Display chat history
                if st.session_state.chat_history:
                    for chat in st.session_state.chat_history:
                        st.write(f"**You:** {chat['user']}")
                        st.write(f"**AI:** {chat['ai']}")

                    # Let the chat container scroll when it overflows
                    st.write("<style>div.stChat {overflow-y: auto;}</style>", unsafe_allow_html=True)

                # Add three dots as a loading indicator if still waiting for response
                if st.session_state.loading:
                    st.write("**AI is typing** ...")
            else:
                st.error("Could not extract text from the PDF.")
        else:
            st.error("Failed to upload PDF to Pinata.")
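Streamlit reruns the whole script on every interaction, so in the app above the Pinata upload runs again each time Send is clicked. One way to avoid repeat uploads is to remember the CID per file content, sketched below. `upload_once` is a hypothetical helper; `cache` can be any dict-like store (`st.session_state` would be a natural choice in the app), and `uploader` stands in for a small wrapper around `upload_pdf_to_pinata` that accepts bytes.

```python
import hashlib


def upload_once(cache, data, uploader):
    """Return the CID for `data`, calling `uploader` only for unseen content.

    cache:    dict-like store mapping a content digest to a CID
    data:     the raw file bytes
    uploader: callable taking bytes and returning a CID, or None on failure
    """
    digest = hashlib.sha256(data).hexdigest()
    if digest not in cache:
        cid = uploader(data)
        if cid is None:
            return None  # failures are not cached, so a later retry is possible
        cache[digest] = cid
    return cache[digest]
```

Keying on a hash of the bytes rather than the file name means a re-uploaded file with changed contents is still sent to Pinata.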

Step 5: Run the application

To run the app locally, use the following command:

    streamlit run app.py

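Step 6: Code walkthrough

Pinata upload

- The user uploads a PDF file, which is saved locally for a moment and then uploaded to Pinata with the upload_pdf_to_pinata function. Pinata returns a hash (CID) that identifies the file stored on IPFS.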
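A CID on its own is just an identifier; to open the file in a browser it is usually combined with an IPFS gateway using the path-style `/ipfs/<cid>` convention. The sketch below assumes Pinata's public gateway at `https://gateway.pinata.cloud` as the default; `gateway_url` is a hypothetical helper and the CID in the usage note is a placeholder, not a real file.

```python
def gateway_url(cid, gateway="https://gateway.pinata.cloud"):
    """Build an HTTP URL for a CID using the path-style /ipfs/<cid> convention."""
    cid = cid.strip()
    if not cid:
        raise ValueError("CID must not be empty")
    # Tolerate a trailing slash on the gateway so the URL has exactly one separator.
    return f"{gateway.rstrip('/')}/ipfs/{cid}"
```

For example, `gateway_url("QmExampleCid")` produces `https://gateway.pinata.cloud/ipfs/QmExampleCid`, which the app could show next to the upload confirmation.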

PDF extraction

- Once the file is uploaded, the PDF content is extracted with PyPDF2. The extracted text is then shown in a text area.
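Pages without a text layer, such as scanned images, tend to produce empty results, and concatenating pages directly runs the last word of one page into the first word of the next. The sketch below is one way to join per-page text more defensively; `join_page_texts` is a hypothetical helper that works on plain strings, so it can be tried without a PDF.

```python
def join_page_texts(page_texts):
    """Join per-page text, skipping pages that yielded None or only whitespace."""
    cleaned = [text.strip() for text in page_texts if text and text.strip()]
    # A blank line between pages keeps page boundaries visible in the prompt.
    return "\n\n".join(cleaned)
```

In the app this could be called as `join_page_texts(page.extract_text() for page in reader.pages)`; an empty result then signals that the PDF has no extractable text.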

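OpenAI interaction

- The user can ask questions about the PDF content through the text input. The get_openai_response function sends the user's query together with the PDF content to OpenAI, which returns a relevant response.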
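As written, each request carries only the PDF text and the latest question, so the model does not see earlier turns. If follow-up questions should build on previous answers, the stored chat history can be replayed as alternating user and assistant messages. The sketch below assumes the history format used in app.py (a list of dictionaries with "user" and "ai" keys); `history_to_messages` is a hypothetical helper, not part of the tutorial's code.

```python
SYSTEM_PROMPT = "You are a helpful assistant for answering questions about the PDF."


def history_to_messages(chat_history, pdf_text, question):
    """Build a message list that replays earlier turns before the new question."""
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": pdf_text},
    ]
    for turn in chat_history:
        # Each stored turn becomes one user message and one assistant reply.
        messages.append({"role": "user", "content": turn["user"]})
        messages.append({"role": "assistant", "content": turn["ai"]})
    messages.append({"role": "user", "content": question})
    return messages
```

Replaying history makes every request longer, so a long conversation would need the oldest turns trimmed at some point.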

The final code is available in this GitHub repo:

https://github.com/Jagroop2001/chat-with-pdf

That's all for this post! Stay tuned for more updates, and keep building amazing apps! 💻✨

Happy coding! 😊

Backend Development Tutorials - Java and Spring Boot in Practice - msg200.com
