Provisioning an EKS Cluster with Terraform, Terragrunt, and GitHub Actions

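As cloud-native architectures continue to evolve, Kubernetes has become the de facto standard for container orchestration. Amazon Elastic Kubernetes Service (EKS) is a popular managed Kubernetes service that simplifies deploying and managing containerized applications on AWS. To streamline EKS cluster provisioning and automate infrastructure management, developers and DevOps teams often turn to tools such as Terraform, Terragrunt, and GitHub Actions.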

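In this article, we will explore how to integrate these tools to provision an EKS cluster on AWS, covering the benefits of combining them, the key concepts involved, and a step-by-step process for setting up an EKS cluster using infrastructure-as-code principles.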

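Whether you are a developer, a DevOps engineer, or an infrastructure enthusiast, this article is meant to serve as a comprehensive guide to using Terraform, Terragrunt, and GitHub Actions to provision and manage your EKS clusters efficiently.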

Before diving in, however, there are a few things to note.

Disclaimer

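a) Since we will use Terraform and Terragrunt to provision our infrastructure, familiarity with both is required to follow along.

b) Since we will use GitHub Actions to automate the provisioning of our infrastructure, familiarity with that tool is required to follow along.

c) Some basic understanding of Docker and of container orchestration with Kubernetes will also help.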

Here are the steps we will follow to provision our EKS cluster:

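- Write the Terraform code for the building blocks.

- Write the Terragrunt code to provision the infrastructure.

- Create a GitHub Actions workflow and delegate the infrastructure provisioning task to it.

- Add a GitHub Actions workflow job to destroy our infrastructure when we are done.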

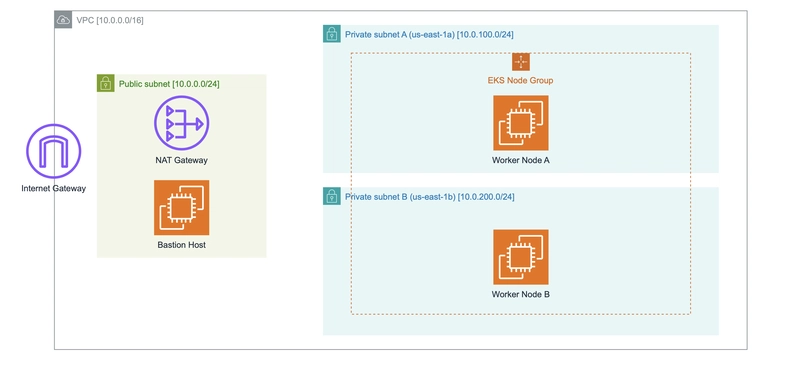

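Below is a diagram of the VPC and the components we will create. Keep in mind that the control plane components will be deployed in an EKS-managed VPC: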

1. Write the Terraform code for the building blocks

Each building block will contain the following files:

main.tf

outputs.tf

provider.tf

variables.tf

We will use version 4.x of the AWS provider for Terraform, so the provider.tf file will be the same in all building blocks:

provider.tf

terraform {

required_version = ">= 1.4.2"

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 4.0"

}

}

}

provider "aws" {

access_key = var.AWS_ACCESS_KEY_ID

secret_key = var.AWS_SECRET_ACCESS_KEY

region = var.AWS_REGION

token = var.AWS_SESSION_TOKEN

}

We can see a few variables here. Every building block also declares them in its variables.tf file:

variables.tf

variable "AWS_ACCESS_KEY_ID" {

type = string

}

variable "AWS_SECRET_ACCESS_KEY" {

type = string

}

variable "AWS_SESSION_TOKEN" {

type = string

default = null

}

variable "AWS_REGION" {

type = string

}

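So these variables will not be shown again when we define the building blocks below, but you should still include them in each building block's variables.tf file.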
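Because two of these variables hold credentials, one optional refinement is to mark them as sensitive so that Terraform redacts their values in plan and apply output. The sketch below is a variant of the same variables.tf, not the version used in the rest of this article; the sensitive argument assumes Terraform 0.14 or later, which the required_version constraint above already satisfies.

```hcl
# variables.tf (variant): same variables as above, with credentials marked sensitive.
variable "AWS_ACCESS_KEY_ID" {
  type      = string
  sensitive = true # value is redacted in plan/apply output
}

variable "AWS_SECRET_ACCESS_KEY" {
  type      = string
  sensitive = true
}

variable "AWS_SESSION_TOKEN" {
  type      = string
  default   = null # only needed when using temporary credentials
  sensitive = true
}

variable "AWS_REGION" {
  type = string # not a secret, so left unchanged
}
```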

a) VPC building block

main.tf

resource "aws_vpc" "vpc" {

cidr_block = var.vpc_cidr

instance_tenancy = var.instance_tenancy

enable_dns_support = var.enable_dns_support

enable_dns_hostnames = var.enable_dns_hostnames

assign_generated_ipv6_cidr_block = var.assign_generated_ipv6_cidr_block

tags = merge(var.vpc_tags, {

Name = var.vpc_name

})

}

variables.tf

variable "vpc_cidr" {

type = string

}

variable "vpc_name" {

type = string

}

variable "instance_tenancy" {

type = string

default = "default"

}

variable "enable_dns_support" {

type = bool

default = true

}

variable "enable_dns_hostnames" {

type = bool

}

variable "assign_generated_ipv6_cidr_block" {

type = bool

default = false

}

variable "vpc_tags" {

type = map(string)

}

outputs.tf

output "vpc_id" {

value = aws_vpc.vpc.id

}
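If you want the VPC building block to reject malformed input early, a validation block can be added to the vpc_cidr variable. This is an optional sketch rather than part of the original building block; it relies on Terraform's built-in can() and cidrhost() functions.

```hcl
# variables.tf (variant): vpc_cidr with an input check.
variable "vpc_cidr" {
  type = string

  validation {
    # cidrhost() raises an error for anything that is not valid CIDR notation,
    # and can() converts that error into false.
    condition     = can(cidrhost(var.vpc_cidr, 0))
    error_message = "The vpc_cidr value must be a valid CIDR block, for example 10.0.0.0/16."
  }
}
```

With this in place, a typo such as 10.0.0.0/33 should be caught at plan time instead of surfacing as an AWS API error.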

b) Internet Gateway building block

main.tf

resource "aws_internet_gateway" "igw" {

vpc_id = var.vpc_id

tags = merge(var.tags, {

Name = var.name

})

}

variables.tf

variable "vpc_id" {

type = string

}

variable "name" {

type = string

}

variable "tags" {

type = map(string)

}

outputs.tf

output "igw_id" {

value = aws_internet_gateway.igw.id

}

c) Route Table building block

main.tf

resource "aws_route_table" "route_tables" {

for_each = { for rt in var.route_tables : rt.name => rt }

vpc_id = each.value.vpc_id

dynamic "route" {

for_each = { for route in each.value.routes : route.cidr_block => route if each.value.is_igw_rt }

content {

cidr_block = route.value.cidr_block

gateway_id = route.value.igw_id

}

}

dynamic "route" {

for_each = { for route in each.value.routes : route.cidr_block => route if !each.value.is_igw_rt }

content {

cidr_block = route.value.cidr_block

nat_gateway_id = route.value.nat_gw_id

}

}

tags = merge(each.value.tags, {

Name = each.value.name

})

}

variables.tf

variable "route_tables" {

type = list(object({

name = string

vpc_id = string

is_igw_rt = bool

routes = list(object({

cidr_block = string

igw_id = optional(string)

nat_gw_id = optional(string)

}))

tags = map(string)

}))

}

outputs.tf

output "route_table_ids" {

value = values(aws_route_table.route_tables)[*].id

}
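The two dynamic "route" blocks in this building block rely on a for expression with an if clause: when is_igw_rt is true, the first expression yields every route and the second yields an empty map, and the reverse holds when it is false. The standalone sketch below isolates that behavior with made-up values (the gateway ID is a placeholder), so it can be evaluated without creating any AWS resources.

```hcl
locals {
  # Hypothetical sample input mirroring one element of var.route_tables.
  is_igw_rt = true
  routes = [
    { cidr_block = "0.0.0.0/0", igw_id = "igw-0123456789abcdef0", nat_gw_id = null }
  ]

  # Same filter expressions as in the building block, applied to the sample.
  igw_routes = { for route in local.routes : route.cidr_block => route if local.is_igw_rt }
  nat_routes = { for route in local.routes : route.cidr_block => route if !local.is_igw_rt }
}

output "igw_route_keys" {
  value = keys(local.igw_routes) # one key, "0.0.0.0/0"
}

output "nat_route_keys" {
  value = keys(local.nat_routes) # empty, because is_igw_rt is true
}
```

Because the routes are keyed by cidr_block, each route table can hold at most one route per destination CIDR, which matches how AWS route tables behave.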

d) Subnet building block

main.tf

# Create public subnets

resource "aws_subnet" "public_subnets" {

for_each = { for subnet in var.subnets : subnet.name => subnet if subnet.is_public }

vpc_id = each.value.vpc_id

cidr_block = each.value.cidr_block

availability_zone = each.value.availability_zone

map_public_ip_on_launch = each.value.map_public_ip_on_launch

private_dns_hostname_type_on_launch = each.value.private_dns_hostname_type_on_launch

tags = merge(each.value.tags, {

Name = each.value.name

})

}

# Associate public subnets with their route table

resource "aws_route_table_association" "public_subnets" {

for_each = { for subnet in var.subnets : subnet.name => subnet if subnet.is_public }

subnet_id = aws_subnet.public_subnets[each.value.name].id

route_table_id = each.value.route_table_id

}

# Create private subnets

resource "aws_subnet" "private_subnets" {

for_each = { for subnet in var.subnets : subnet.name => subnet if !subnet.is_public }

vpc_id = each.value.vpc_id

cidr_block = each.value.cidr_block

availability_zone = each.value.availability_zone

private_dns_hostname_type_on_launch = each.value.private_dns_hostname_type_on_launch

tags = merge(each.value.tags, {

Name = each.value.name

})

}

# Associate private subnets with their route table

resource "aws_route_table_association" "private_subnets" {

for_each = { for subnet in var.subnets : subnet.name => subnet if !subnet.is_public }

subnet_id = aws_subnet.private_subnets[each.value.name].id

route_table_id = each.value.route_table_id

}

variables.tf

variable "subnets" {

type = list(object({

name = string

vpc_id = string

cidr_block = string

availability_zone = optional(string)

map_public_ip_on_launch = optional(bool, true)

private_dns_hostname_type_on_launch = optional(string, "resource-name")

is_public = optional(bool, true)

route_table_id = string

tags = map(string)

}))

}

outputs.tf

output "public_subnets" {

value = values(aws_subnet.public_subnets)[*].id

}

output "private_subnets" {

value = values(aws_subnet.private_subnets)[*].id

}
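Note that values() returns the subnets in lexicographic order of their map keys (here, the subnet names), not in the order in which they were declared. That matters whenever a caller picks a subnet by index. As a hedged alternative, extra outputs keyed by name could be added to outputs.tf; the output names below are my own and are not used elsewhere in this article.

```hcl
# outputs.tf (optional additions): look subnets up by name instead of by index.
output "public_subnet_ids_by_name" {
  # Map of subnet name => subnet ID.
  value = { for name, subnet in aws_subnet.public_subnets : name => subnet.id }
}

output "private_subnet_ids_by_name" {
  value = { for name, subnet in aws_subnet.private_subnets : name => subnet.id }
}
```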

e) Elastic IP building block

main.tf

resource "aws_eip" "eip" {}

outputs.tf

output "eip_id" {

value = aws_eip.eip.allocation_id

}

f) NAT Gateway building block

main.tf

resource "aws_nat_gateway" "nat_gw" {

allocation_id = var.eip_id

subnet_id = var.subnet_id

tags = merge(var.tags, {

Name = var.name

})

}

variables.tf

variable "name" {

type = string

}

variable "eip_id" {

type = string

}

variable "subnet_id" {

type = string

description = "The ID of the public subnet in which the NAT Gateway should be placed"

}

variable "tags" {

type = map(string)

}

outputs.tf

output "nat_gw_id" {

value = aws_nat_gateway.nat_gw.id

}

g) NACL building block

main.tf

resource "aws_network_acl" "nacls" {

for_each = { for nacl in var.nacls : nacl.name => nacl }

vpc_id = each.value.vpc_id

dynamic "egress" {

for_each = { for rule in each.value.egress : rule.rule_no => rule }

content {

protocol = egress.value.protocol

rule_no = egress.value.rule_no

action = egress.value.action

cidr_block = egress.value.cidr_block

from_port = egress.value.from_port

to_port = egress.value.to_port

}

}

dynamic "ingress" {

for_each = { for rule in each.value.ingress : rule.rule_no => rule }

content {

protocol = ingress.value.protocol

rule_no = ingress.value.rule_no

action = ingress.value.action

cidr_block = ingress.value.cidr_block

from_port = ingress.value.from_port

to_port = ingress.value.to_port

}

}

tags = merge(each.value.tags, {

Name = each.value.name

})

}

resource "aws_network_acl_association" "nacl_associations" {

for_each = { for nacl in var.nacls : "${nacl.name}_${nacl.subnet_id}" => nacl }

network_acl_id = aws_network_acl.nacls[each.value.name].id

subnet_id = each.value.subnet_id

}

variables.tf

variable "nacls" {

type = list(object({

name = string

vpc_id = string

egress = list(object({

protocol = string

rule_no = number

action = string

cidr_block = string

from_port = number

to_port = number

}))

ingress = list(object({

protocol = string

rule_no = number

action = string

cidr_block = string

from_port = number

to_port = number

}))

subnet_id = string

tags = map(string)

}))

}

outputs.tf

output "nacls" {

value = values(aws_network_acl.nacls)[*].id

}

output "nacl_associations" {

value = values(aws_network_acl_association.nacl_associations)[*].id

}

h) Security Group building block

main.tf

resource "aws_security_group" "security_group" {

name = var.name

description = var.description

vpc_id = var.vpc_id

# Ingress rules

dynamic "ingress" {

for_each = var.ingress_rules

content {

from_port = ingress.value.from_port

to_port = ingress.value.to_port

protocol = ingress.value.protocol

cidr_blocks = ingress.value.cidr_blocks

}

}

# Egress rules

dynamic "egress" {

for_each = var.egress_rules

content {

from_port = egress.value.from_port

to_port = egress.value.to_port

protocol = egress.value.protocol

cidr_blocks = egress.value.cidr_blocks

}

}

tags = merge(var.tags, {

Name = var.name

})

}

variables.tf

variable "vpc_id" {

type = string

}

variable "name" {

type = string

}

variable "description" {

type = string

}

variable "ingress_rules" {

type = list(object({

protocol = string

from_port = string

to_port = string

cidr_blocks = list(string)

}))

default = []

}

variable "egress_rules" {

type = list(object({

protocol = string

from_port = string

to_port = string

cidr_blocks = list(string)

}))

default = []

}

variable "tags" {

type = map(string)

}

outputs.tf

output "security_group_id" {

value = aws_security_group.security_group.id

}
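To make the shape of ingress_rules and egress_rules concrete, here is a hypothetical set of input values for this building block, written as a .tfvars-style fragment. All names, IDs, and CIDR ranges are placeholders of my own choosing (203.0.113.0/24 is a documentation-only range), not values used later in this article.

```hcl
# Hypothetical inputs for the security group building block.
name        = "example-bastion-sg"
description = "Allow SSH from a trusted range"
vpc_id      = "vpc-0123456789abcdef0" # placeholder VPC ID

ingress_rules = [
  {
    protocol    = "tcp"
    from_port   = 22
    to_port     = 22
    cidr_blocks = ["203.0.113.0/24"] # replace with your own trusted range
  }
]

egress_rules = [
  {
    protocol    = "-1" # all protocols
    from_port   = 0
    to_port     = 0
    cidr_blocks = ["0.0.0.0/0"]
  }
]

tags = {}
```

The ports are written as plain numbers; Terraform converts them to the string type that the variable declares.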

i) EC2 building block

main.tf

# AMI

data "aws_ami" "ami" {

most_recent = var.most_recent_ami

owners = var.owners

filter {

name = var.ami_name_filter

values = var.ami_values_filter

}

}

# EC2 Instance

resource "aws_instance" "ec2_instance" {

ami = data.aws_ami.ami.id

iam_instance_profile = var.use_instance_profile ? var.instance_profile_name : null

instance_type = var.instance_type

subnet_id = var.subnet_id

vpc_security_group_ids = var.existing_security_group_ids

associate_public_ip_address = var.assign_public_ip

key_name = var.uses_ssh ? var.keypair_name : null

user_data = var.use_userdata ? file(var.userdata_script_path) : null

user_data_replace_on_change = var.use_userdata ? var.user_data_replace_on_change : null

tags = merge(

{

Name = var.instance_name

},

var.extra_tags

)

}

variables.tf

variable "most_recent_ami" {

type = bool

}

variable "owners" {

type = list(string)

default = ["amazon"]

}

variable "ami_name_filter" {

type = string

default = "name"

}

variable "ami_values_filter" {

type = list(string)

default = ["al2023-ami-2023.*-x86_64"]

}

variable "use_instance_profile" {

type = bool

default = false

}

variable "instance_profile_name" {

type = string

}

variable "instance_name" {

description = "Name of the instance"

type = string

}

variable "subnet_id" {

description = "ID of the subnet"

type = string

}

variable "instance_type" {

description = "Type of EC2 instance"

type = string

default = "t2.micro"

}

variable "assign_public_ip" {

type = bool

default = true

}

variable "extra_tags" {

description = "Additional tags for EC2 instances"

type = map(string)

default = {}

}

variable "existing_security_group_ids" {

description = "security group IDs for EC2 instances"

type = list(string)

}

variable "uses_ssh" {

type = bool

}

variable "keypair_name" {

type = string

}

variable "use_userdata" {

description = "Whether to use userdata"

type = bool

default = false

}

variable "userdata_script_path" {

description = "Path to the userdata script"

type = string

}

variable "user_data_replace_on_change" {

type = bool

}

outputs.tf

output "instance_id" {

value = aws_instance.ec2_instance.id

}

output "instance_arn" {

value = aws_instance.ec2_instance.arn

}

output "instance_private_ip" {

value = aws_instance.ec2_instance.private_ip

}

output "instance_public_ip" {

value = aws_instance.ec2_instance.public_ip

}

output "instance_public_dns" {

value = aws_instance.ec2_instance.public_dns

}

j) IAM Role building block

main.tf

data "aws_iam_policy_document" "assume_role" {

statement {

effect = "Allow"

dynamic "principals" {

for_each = { for principal in var.principals : principal.type => principal }

content {

type = principals.value.type

identifiers = principals.value.identifiers

}

}

actions = ["sts:AssumeRole"]

dynamic "condition" {

for_each = var.is_external ? [var.condition] : []

content {

test = condition.value.test

variable = condition.value.variable

values = condition.value.values

}

}

}

}

data "aws_iam_policy_document" "policy_document" {

dynamic "statement" {

for_each = { for statement in var.policy_statements : statement.sid => statement }

content {

effect = "Allow"

actions = statement.value.actions

resources = statement.value.resources

dynamic "condition" {

for_each = statement.value.has_condition ? [statement.value.condition] : []

content {

test = condition.value.test

variable = condition.value.variable

values = condition.value.values

}

}

}

}

}

resource "aws_iam_role" "role" {

name = var.role_name

assume_role_policy = data.aws_iam_policy_document.assume_role.json

}

resource "aws_iam_role_policy" "policy" {

count = length(var.policy_statements) > 0 && var.policy_name != "" ? 1 : 0

name = var.policy_name

role = aws_iam_role.role.id

policy = data.aws_iam_policy_document.policy_document.json

}

resource "aws_iam_role_policy_attachment" "attachment" {

for_each = { for attachment in var.policy_attachments : attachment.arn => attachment }

policy_arn = each.value.arn

role = aws_iam_role.role.name

}

variables.tf

variable "principals" {

type = list(object({

type = string

identifiers = list(string)

}))

}

variable "is_external" {

type = bool

default = false

}

variable "condition" {

type = object({

test = string

variable = string

values = list(string)

})

default = {

test = "test"

variable = "variable"

values = ["values"]

}

}

variable "role_name" {

type = string

}

variable "policy_name" {

type = string

}

variable "policy_attachments" {

type = list(object({

arn = string

}))

default = []

}

variable "policy_statements" {

type = list(object({

sid = string

actions = list(string)

resources = list(string)

has_condition = optional(bool, false)

condition = optional(object({

test = string

variable = string

values = list(string)

}))

}))

default = [

{

sid = "CloudWatchLogsPermissions"

actions = [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:DescribeLogGroups",

"logs:DescribeLogStreams",

"logs:PutLogEvents",

"logs:GetLogEvents",

"logs:FilterLogEvents",

],

resources = ["*"]

}

]

}

outputs.tf

output "role_arn" {

value = aws_iam_role.role.arn

}

output "role_name" {

value = aws_iam_role.role.name

}

output "unique_id" {

value = aws_iam_role.role.unique_id

}

k) Instance Profile building block

main.tf

# Instance Profile

resource "aws_iam_instance_profile" "instance_profile" {

name = var.instance_profile_name

path = var.path

role = var.iam_role_name

tags = merge(var.instance_profile_tags, {

Name = var.instance_profile_name

})

}

variables.tf

variable "instance_profile_name" {

type = string

description = "(Optional, Forces new resource) Name of the instance profile. If omitted, Terraform will assign a random, unique name. Conflicts with name_prefix. Can be a string of characters consisting of upper and lowercase alphanumeric characters and these special characters: _, +, =, ,, ., @, -. Spaces are not allowed."

}

variable "iam_role_name" {

type = string

description = "(Optional) Name of the role to add to the profile."

}

variable "path" {

type = string

default = "/"

description = "(Optional, default ' / ') Path to the instance profile. For more information about paths, see IAM Identifiers in the IAM User Guide. Can be a string of characters consisting of either a forward slash (/) by itself or a string that must begin and end with forward slashes. Can include any ASCII character from the ! (\u0021) through the DEL character (\u007F), including most punctuation characters, digits, and upper and lowercase letters."

}

variable "instance_profile_tags" {

type = map(string)

}

outputs.tf

output "arn" {

value = aws_iam_instance_profile.instance_profile.arn

}

output "name" {

value = aws_iam_instance_profile.instance_profile.name

}

output "id" {

value = aws_iam_instance_profile.instance_profile.id

}

output "unique_id" {

value = aws_iam_instance_profile.instance_profile.unique_id

}

l) EKS Cluster building block

main.tf

# EKS Cluster

resource "aws_eks_cluster" "cluster" {

name = var.name

enabled_cluster_log_types = var.enabled_cluster_log_types

role_arn = var.cluster_role_arn

version = var.cluster_version

vpc_config {

subnet_ids = var.subnet_ids

}

}

variables.tf

variable "name" {

type = string

description = "(Required) Name of the cluster. Must be between 1-100 characters in length. Must begin with an alphanumeric character, and must only contain alphanumeric characters, dashes and underscores (^[0-9A-Za-z][A-Za-z0-9\\-_]+$)."

}

variable "enabled_cluster_log_types" {

type = list(string)

description = "(Optional) List of the desired control plane logging to enable."

default = []

}

variable "cluster_role_arn" {

type = string

description = "(Required) ARN of the IAM role that provides permissions for the Kubernetes control plane to make calls to AWS API operations on your behalf."

}

variable "subnet_ids" {

type = list(string)

description = "(Required) List of subnet IDs. Must be in at least two different availability zones. Amazon EKS creates cross-account elastic network interfaces in these subnets to allow communication between your worker nodes and the Kubernetes control plane."

}

variable "cluster_version" {

type = string

description = "(Optional) Desired Kubernetes master version. If you do not specify a value, the latest available version at resource creation is used and no upgrades will occur except those automatically triggered by EKS. The value must be configured and increased to upgrade the version when desired. Downgrades are not supported by EKS."

default = null

}

outputs.tf

output "arn" {

value = aws_eks_cluster.cluster.arn

}

output "endpoint" {

value = aws_eks_cluster.cluster.endpoint

}

output "id" {

value = aws_eks_cluster.cluster.id

}

output "kubeconfig-certificate-authority-data" {

value = aws_eks_cluster.cluster.certificate_authority[0].data

}

output "name" {

value = aws_eks_cluster.cluster.name

}

output "oidc_tls_issuer" {

value = aws_eks_cluster.cluster.identity[0].oidc[0].issuer

}

output "version" {

value = aws_eks_cluster.cluster.version

}

m) EKS Add-ons building block

main.tf

# EKS Add-On

resource "aws_eks_addon" "addon" {

for_each = { for addon in var.addons : addon.name => addon }

cluster_name = var.cluster_name

addon_name = each.value.name

addon_version = each.value.version

}

variables.tf

variable "addons" {

type = list(object({

name = string

version = string

}))

description = "(Required) List of EKS add-ons to install, each with a name and a version."

}

variable "cluster_name" {

type = string

description = "(Required) Name of the EKS Cluster. Must be between 1-100 characters in length. Must begin with an alphanumeric character, and must only contain alphanumeric characters, dashes and underscores (^[0-9A-Za-z][A-Za-z0-9\\-_]+$)."

}

outputs.tf

output "arns" {

value = values(aws_eks_addon.addon)[*].arn

}

n) EKS Node Group building block

main.tf

# EKS node group

resource "aws_eks_node_group" "node_group" {

cluster_name = var.cluster_name

node_group_name = var.node_group_name

node_role_arn = var.node_role_arn

subnet_ids = var.subnet_ids

version = var.cluster_version

ami_type = var.ami_type

capacity_type = var.capacity_type

disk_size = var.disk_size

instance_types = var.instance_types

scaling_config {

desired_size = var.scaling_config.desired_size

max_size = var.scaling_config.max_size

min_size = var.scaling_config.min_size

}

update_config {

max_unavailable = var.update_config.max_unavailable

max_unavailable_percentage = var.update_config.max_unavailable_percentage

}

}

variables.tf

variable "cluster_name" {

type = string

description = "(Required) Name of the EKS Cluster. Must be between 1-100 characters in length. Must begin with an alphanumeric character, and must only contain alphanumeric characters, dashes and underscores (^[0-9A-Za-z][A-Za-z0-9\\-_]+$)."

}

variable "node_group_name" {

type = string

description = "(Optional) Name of the EKS Node Group. If omitted, Terraform will assign a random, unique name. Conflicts with node_group_name_prefix. The node group name can't be longer than 63 characters. It must start with a letter or digit, but can also include hyphens and underscores for the remaining characters."

}

variable "node_role_arn" {

type = string

description = "(Required) Amazon Resource Name (ARN) of the IAM Role that provides permissions for the EKS Node Group."

}

variable "scaling_config" {

type = object({

desired_size = number

max_size = number

min_size = number

})

default = {

desired_size = 1

max_size = 1

min_size = 1

}

description = "(Required) Configuration block with scaling settings."

}

variable "subnet_ids" {

type = list(string)

description = "(Required) Identifiers of EC2 Subnets to associate with the EKS Node Group. These subnets must have the following resource tag: kubernetes.io/cluster/CLUSTER_NAME (where CLUSTER_NAME is replaced with the name of the EKS Cluster)."

}

variable "update_config" {

type = object({

max_unavailable_percentage = optional(number)

max_unavailable = optional(number)

})

}

variable "cluster_version" {

type = string

description = "(Optional) Kubernetes version. Defaults to EKS Cluster Kubernetes version. Terraform will only perform drift detection if a configuration value is provided."

default = null

}

variable "ami_type" {

type = string

description = "(Optional) Type of Amazon Machine Image (AMI) associated with the EKS Node Group. Valid values are: AL2_x86_64 | AL2_x86_64_GPU | AL2_ARM_64 | CUSTOM | BOTTLEROCKET_ARM_64 | BOTTLEROCKET_x86_64 | BOTTLEROCKET_ARM_64_NVIDIA | BOTTLEROCKET_x86_64_NVIDIA | WINDOWS_CORE_2019_x86_64 | WINDOWS_FULL_2019_x86_64 | WINDOWS_CORE_2022_x86_64 | WINDOWS_FULL_2022_x86_64 | AL2023_x86_64_STANDARD | AL2023_ARM_64_STANDARD"

default = "AL2023_x86_64_STANDARD"

}

variable "capacity_type" {

type = string

description = "(Optional) Type of capacity associated with the EKS Node Group. Valid values: ON_DEMAND, SPOT."

default = "ON_DEMAND"

}

variable "disk_size" {

type = number

description = "(Optional) Disk size in GiB for worker nodes. Defaults to 20."

default = 20

}

variable "instance_types" {

type = list(string)

description = "(Optional) Set of instance types associated with the EKS Node Group. Defaults to [\"t3.medium\"]."

default = ["t3.medium"]

}

outputs.tf

output "arn" {

value = aws_eks_node_group.node_group.arn

}

o) IAM OIDC building block (allows pods to assume IAM roles)

main.tf

data "tls_certificate" "tls" {

url = var.oidc_issuer

}

resource "aws_iam_openid_connect_provider" "provider" {

client_id_list = var.client_id_list

thumbprint_list = data.tls_certificate.tls.certificates[*].sha1_fingerprint

url = data.tls_certificate.tls.url

}

data "aws_iam_policy_document" "assume_role_policy" {

statement {

actions = ["sts:AssumeRoleWithWebIdentity"]

effect = "Allow"

condition {

test = "StringEquals"

variable = "${replace(aws_iam_openid_connect_provider.provider.url, "https://", "")}:sub"

values = ["system:serviceaccount:kube-system:aws-node"]

}

principals {

identifiers = [aws_iam_openid_connect_provider.provider.arn]

type = "Federated"

}

}

}

resource "aws_iam_role" "role" {

assume_role_policy = data.aws_iam_policy_document.assume_role_policy.json

name = var.role_name

}

variables.tf

variable "role_name" {

type = string

description = "(Required) Name of the IAM role."

}

variable "client_id_list" {

type = list(string)

default = ["sts.amazonaws.com"]

}

variable "oidc_issuer" {

type = string

}

outputs.tf

output "provider_arn" {

value = aws_iam_openid_connect_provider.provider.arn

}

output "provider_id" {

value = aws_iam_openid_connect_provider.provider.id

}

output "provider_url" {

value = aws_iam_openid_connect_provider.provider.url

}

output "role_arn" {

value = aws_iam_role.role.arn

}
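The trust policy in this building block restricts the role to the aws-node service account in kube-system through the :sub claim of the OIDC token. AWS guidance for IAM roles for service accounts commonly checks the :aud claim as well. The sketch below is a variant of the same data source with that extra condition; the data source name and the local value are my own, and this is an optional hardening rather than what the rest of this article uses.

```hcl
locals {
  # Issuer URL without the scheme, which is the form used in IAM condition keys.
  oidc_issuer_host = replace(aws_iam_openid_connect_provider.provider.url, "https://", "")
}

data "aws_iam_policy_document" "assume_role_policy_with_aud" {
  statement {
    actions = ["sts:AssumeRoleWithWebIdentity"]
    effect  = "Allow"

    # Same subject check as the original policy.
    condition {
      test     = "StringEquals"
      variable = "${local.oidc_issuer_host}:sub"
      values   = ["system:serviceaccount:kube-system:aws-node"]
    }

    # Additional audience check (assumed hardening, not in the original).
    condition {
      test     = "StringEquals"
      variable = "${local.oidc_issuer_host}:aud"
      values   = ["sts.amazonaws.com"]
    }

    principals {
      identifiers = [aws_iam_openid_connect_provider.provider.arn]
      type        = "Federated"
    }
  }
}
```

To use it, the aws_iam_role resource would reference data.aws_iam_policy_document.assume_role_policy_with_aud.json instead of the original document.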

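With the building blocks defined, we can now version them in GitHub repositories and use them in the next step to develop our Terragrunt code.

2. Write the Terragrunt code to provision the infrastructure

Our Terragrunt code will have the following directory structure: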

infra-live/
  <environment>/
    <module_1>/
      terragrunt.hcl
    <module_2>/
      terragrunt.hcl
    ...
    <module_n>/
      terragrunt.hcl
  terragrunt.hcl

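In this article, we will only have a dev directory. It will contain one directory for each specific resource we want to create.

Our final folder structure will be: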

infra-live/
  dev/
    bastion-ec2/
      terragrunt.hcl
      user-data.sh
    bastion-instance-profile/
      terragrunt.hcl
    bastion-role/
      terragrunt.hcl
    eks-addons/
      terragrunt.hcl
    eks-cluster/
      terragrunt.hcl
    eks-cluster-role/
      terragrunt.hcl
    eks-node-group/
      terragrunt.hcl
    eks-pod-iam/
      terragrunt.hcl
    internet-gateway/
      terragrunt.hcl
    nacl/
      terragrunt.hcl
    nat-gateway/
      terragrunt.hcl
    nat-gw-eip/
      terragrunt.hcl
    private-route-table/
      terragrunt.hcl
    private-subnets/
      terragrunt.hcl
    public-route-table/
      terragrunt.hcl
    public-subnets/
      terragrunt.hcl
    security-group/
      terragrunt.hcl
    vpc/
      terragrunt.hcl
    worker-node-role/
      terragrunt.hcl
  .gitignore
  terragrunt.hcl

a) infra-live/terragrunt.hcl

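Our root terragrunt.hcl file will contain the configuration for the remote Terraform state. We will use an S3 bucket in AWS to store the Terraform state files; its name must be globally unique for the bucket to be created successfully. This bucket must exist before any Terragrunt configuration is applied. My S3 bucket is in the N. Virginia region (us-east-1).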

generate "backend" {

path = "backend.tf"

if_exists = "overwrite_terragrunt"

contents = <<EOF

terraform {

backend "s3" {

bucket = "<s3_bucket_name>"

key = "infra-live/${path_relative_to_include()}/terraform.tfstate"

region = "us-east-1"

encrypt = true

}

}

EOF

}

Make sure to replace <s3_bucket_name> with the name of your own S3 bucket.
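As an aside, Terragrunt also offers a dedicated remote_state block that can generate the same backend.tf file. The sketch below is an alternative to the generate "backend" block above, not an addition to it (use one or the other); it keeps the same placeholder bucket name, key layout, and region.

```hcl
# Root terragrunt.hcl (alternative): let Terragrunt manage the backend definition.
remote_state {
  backend = "s3"

  # Write the backend configuration into each module's working directory.
  generate = {
    path      = "backend.tf"
    if_exists = "overwrite_terragrunt"
  }

  config = {
    bucket  = "<s3_bucket_name>"
    key     = "infra-live/${path_relative_to_include()}/terraform.tfstate"
    region  = "us-east-1"
    encrypt = true
  }
}
```

Either way, path_relative_to_include() gives every module its own state key, for example infra-live/dev/vpc/terraform.tfstate for the vpc module.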

b) infra-live/dev/vpc/terragrunt.hcl

This module uses the VPC building block to create our VPC.

Our VPC CIDR will be 10.0.0.0/16.

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/vpc.git"

}

inputs = {

vpc_cidr = "10.0.0.0/16"

vpc_name = "eks-demo-vpc"

enable_dns_hostnames = true

vpc_tags = {}

}

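The values passed in the inputs section are the variables defined in the building block.

For this module and the ones that follow, we will not pass the variables AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_REGION in the inputs, because these credentials (with the exception of AWS_REGION) are sensitive. You will have to add them as secrets in the GitHub repository you create to version your Terragrunt code.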

c) infra-live/dev/internet-gateway/terragrunt.hcl

This module uses the Internet Gateway building block as its Terraform source to create the internet gateway for our VPC.

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/internet-gateway.git"

}

dependency "vpc" {

config_path = "../vpc"

}

inputs = {

vpc_id = dependency.vpc.outputs.vpc_id

name = "eks-demo-igw"

tags = {}

}
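One practical consequence of the dependency block is that Terragrunt needs the vpc module's outputs before it can plan this module. If you want to run a plan before the VPC has been applied, Terragrunt's mock_outputs can stand in for the real values. The sketch below is an optional variant of the dependency block above; the mock VPC ID is a made-up placeholder.

```hcl
dependency "vpc" {
  config_path = "../vpc"

  # Placeholder values, used only when the vpc module has no real outputs yet.
  mock_outputs = {
    vpc_id = "vpc-00000000"
  }

  # Restrict the mocks to read-only commands so they can never reach an apply.
  mock_outputs_allowed_terraform_commands = ["validate", "plan"]
}
```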

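d) infra-live/dev/public-route-table/terragrunt.hcl

This module uses the Route Table building block as its Terraform source to create our VPC's public route table, which will be associated with the public subnets we create next.

It also adds a route that directs all internet traffic to the internet gateway.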

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/route-table.git"

}

dependency "vpc" {

config_path = "../vpc"

}

dependency "igw" {

config_path = "../internet-gateway"

}

inputs = {

route_tables = [

{

name = "eks-demo-public-rt"

vpc_id = dependency.vpc.outputs.vpc_id

is_igw_rt = true

routes = [

{

cidr_block = "0.0.0.0/0"

igw_id = dependency.igw.outputs.igw_id

}

]

tags = {}

}

]

}

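e) infra-live/dev/public-subnets/terragrunt.hcl

This module uses the Subnet building block as its Terraform source to create our VPC's public subnets and associate them with the public route table.

The CIDR of the main public subnet will be 10.0.0.0/24. The configuration below also declares two more public subnets, 10.0.1.0/24 (us-east-1a) and 10.0.2.0/24 (us-east-1b).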

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/subnet.git"

}

dependency "vpc" {

config_path = "../vpc"

}

dependency "public-route-table" {

config_path = "../public-route-table"

}

inputs = {

subnets = [

{

name = "eks-demo-public-subnet"

vpc_id = dependency.vpc.outputs.vpc_id

cidr_block = "10.0.0.0/24"

availability_zone = "us-east-1a"

map_public_ip_on_launch = true

private_dns_hostname_type_on_launch = "resource-name"

is_public = true

route_table_id = dependency.public-route-table.outputs.route_table_ids[0]

tags = {}

},

{

name = "eks-demo-rds-subnet-a"

vpc_id = dependency.vpc.outputs.vpc_id

cidr_block = "10.0.1.0/24"

availability_zone = "us-east-1a"

map_public_ip_on_launch = true

private_dns_hostname_type_on_launch = "resource-name"

is_public = true

route_table_id = dependency.public-route-table.outputs.route_table_ids[0]

tags = {}

},

{

name = "eks-demo-rds-subnet-b"

vpc_id = dependency.vpc.outputs.vpc_id

cidr_block = "10.0.2.0/24"

availability_zone = "us-east-1b"

map_public_ip_on_launch = true

private_dns_hostname_type_on_launch = "resource-name"

is_public = true

route_table_id = dependency.public-route-table.outputs.route_table_ids[0]

tags = {}

}

]

}

f) infra-live/dev/nat-gw-eip/terragrunt.hcl

This module uses the Elastic IP building block as its Terraform source to create a static IP in our VPC, which we will associate with the NAT gateway we create next.

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/eip.git"

}

dependency "vpc" {

config_path = "../vpc"

}

inputs = {}

g) infra-live/dev/nat-gateway/terragrunt.hcl

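This module uses the NAT Gateway building block as its Terraform source to create a NAT gateway, which we place in a public subnet of the VPC. The Elastic IP created earlier will be attached to it.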

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/nat-gateway.git"

}

dependency "eip" {

config_path = "../nat-gw-eip"

}

dependency "public-subnets" {

config_path = "../public-subnets"

}

inputs = {

eip_id = dependency.eip.outputs.eip_id

subnet_id = dependency.public-subnets.outputs.public_subnets[0]

name = "eks-demo-nat-gw"

tags = {}

}

h) infra-live/dev/private-route-table/terragrunt.hcl

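This module uses the Route Table building block as its Terraform source to create our VPC's private route table, which will be associated with the private subnets we create next.

It also adds a route that directs all internet traffic to the NAT gateway.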

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/route-table.git"

}

dependency "vpc" {

config_path = "../vpc"

}

dependency "nat-gw" {

config_path = "../nat-gateway"

}

inputs = {

route_tables = [

{

name = "eks-demo-private-rt"

vpc_id = dependency.vpc.outputs.vpc_id

is_igw_rt = false

routes = [

{

cidr_block = "0.0.0.0/0"

nat_gw_id = dependency.nat-gw.outputs.nat_gw_id

}

]

tags = {}

}

]

}

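i) infra-live/dev/private-subnets/terragrunt.hcl

This module uses the Subnet building block as its Terraform source to create our VPC's private subnets and associate them with the private route table.

The application private subnets will have the CIDRs 10.0.100.0/24 (us-east-1a) and 10.0.200.0/24 (us-east-1b), and the database private subnets will have the CIDRs 10.0.10.0/24 (us-east-1a) and 10.0.20.0/24 (us-east-1b).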

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/subnet.git"

}

dependency "vpc" {

config_path = "../vpc"

}

dependency "private-route-table" {

config_path = "../private-route-table"

}

inputs = {

subnets = [

{

name = "eks-demo-app-subnet-a"

vpc_id = dependency.vpc.outputs.vpc_id

cidr_block = "10.0.100.0/24"

availability_zone = "us-east-1a"

map_public_ip_on_launch = false

private_dns_hostname_type_on_launch = "resource-name"

is_public = false

route_table_id = dependency.private-route-table.outputs.route_table_ids[0]

tags = {}

},

{

name = "eks-demo-app-subnet-b"

vpc_id = dependency.vpc.outputs.vpc_id

cidr_block = "10.0.200.0/24"

availability_zone = "us-east-1b"

map_public_ip_on_launch = false

private_dns_hostname_type_on_launch = "resource-name"

is_public = false

route_table_id = dependency.private-route-table.outputs.route_table_ids[0]

tags = {}

},

{

name = "eks-demo-data-subnet-a"

vpc_id = dependency.vpc.outputs.vpc_id

cidr_block = "10.0.10.0/24"

availability_zone = "us-east-1a"

map_public_ip_on_launch = false

private_dns_hostname_type_on_launch = "resource-name"

is_public = false

route_table_id = dependency.private-route-table.outputs.route_table_ids[0]

tags = {}

},

{

name = "eks-demo-data-subnet-b"

vpc_id = dependency.vpc.outputs.vpc_id

cidr_block = "10.0.20.0/24"

availability_zone = "us-east-1b"

map_public_ip_on_launch = false

private_dns_hostname_type_on_launch = "resource-name"

is_public = false

route_table_id = dependency.private-route-table.outputs.route_table_ids[0]

tags = {}

}

]

}
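The four private subnet CIDRs above are hard-coded, but they can also be derived from the VPC CIDR with the built-in cidrsubnet() function, which Terragrunt exposes alongside Terraform's other functions. The sketch below is an optional refactoring idea with local names of my own; it produces the same four ranges.

```hcl
locals {
  vpc_cidr = "10.0.0.0/16"

  # cidrsubnet(prefix, newbits, netnum): /16 plus 8 new bits gives a /24,
  # and netnum selects the value of the third octet.
  app_subnet_a  = cidrsubnet(local.vpc_cidr, 8, 100) # "10.0.100.0/24"
  app_subnet_b  = cidrsubnet(local.vpc_cidr, 8, 200) # "10.0.200.0/24"
  data_subnet_a = cidrsubnet(local.vpc_cidr, 8, 10)  # "10.0.10.0/24"
  data_subnet_b = cidrsubnet(local.vpc_cidr, 8, 20)  # "10.0.20.0/24"
}
```

Each cidr_block in the inputs could then refer to the matching local, for example cidr_block = local.app_subnet_a, so that changing the VPC range in one place updates every subnet.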

j) infra-live/dev/nacl/terragrunt.hcl

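This module uses the NACL building block as its Terraform source to create NACLs for our public and private subnets.

For simplicity, we will configure very permissive NACL and security group rules; in the next blog post, we will enforce stricter security rules for the VPC and the cluster.

Note, however, that the NACLs for the data subnets only allow traffic on port 5432 from the application subnet CIDRs.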

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/nacl.git"

}

dependency "vpc" {

config_path = "../vpc"

}

dependency "public-subnets" {

config_path = "../public-subnets"

}

dependency "private-subnets" {

config_path = "../private-subnets"

}

inputs = {

_vpc_id = dependency.vpc.outputs.vpc_id

nacls = [

# Public NACL

{

name = "eks-demo-public-nacl"

vpc_id = dependency.vpc.outputs.vpc_id

egress = [

{

protocol = "-1"

rule_no = 500

action = "allow"

cidr_block = "0.0.0.0/0"

from_port = 0

to_port = 0

}

]

ingress = [

{

protocol = "-1"

rule_no = 100

action = "allow"

cidr_block = "0.0.0.0/0"

from_port = 0

to_port = 0

}

]

subnet_id = dependency.public-subnets.outputs.public_subnets[0]

tags = {}

},

# App NACL A

{

name = "eks-demo-nacl-a"

vpc_id = dependency.vpc.outputs.vpc_id

egress = [

{

protocol = "-1"

rule_no = 100

action = "allow"

cidr_block = "0.0.0.0/0"

from_port = 0

to_port = 0

}

]

ingress = [

{

protocol = "-1"

rule_no = 100

action = "allow"

cidr_block = "0.0.0.0/0"

from_port = 0

to_port = 0

}

]

subnet_id = dependency.private-subnets.outputs.private_subnets[0]

tags = {}

},

# App NACL B

{

name = "eks-demo-nacl-b"

vpc_id = dependency.vpc.outputs.vpc_id

egress = [

{

protocol = "-1"

rule_no = 100

action = "allow"

cidr_block = "0.0.0.0/0"

from_port = 0

to_port = 0

}

]

ingress = [

{

protocol = "-1"

rule_no = 100

action = "allow"

cidr_block = "0.0.0.0/0"

from_port = 0

to_port = 0

}

]

subnet_id = dependency.private-subnets.outputs.private_subnets[1]

tags = {}

},

# RDS NACL A

{

name = "eks-demo-rds-nacl-a"

vpc_id = dependency.vpc.outputs.vpc_id

egress = [

{

protocol = "tcp"

rule_no = 100

action = "allow"

cidr_block = "10.0.100.0/24"

from_port = 1024

to_port = 65535

},

{

protocol = "tcp"

rule_no = 200

action = "allow"

cidr_block = "10.0.200.0/24"

from_port = 1024

to_port = 65535

},

{

protocol = "tcp"

rule_no = 300

action = "allow"

cidr_block = "10.0.0.0/24"

from_port = 1024

to_port = 65535

}

]

ingress = [

{

protocol = "tcp"

rule_no = 100

action = "allow"

cidr_block = "10.0.100.0/24"

from_port = 5432

to_port = 5432

},

{

protocol = "tcp"

rule_no = 200

action = "allow"

cidr_block = "10.0.200.0/24"

from_port = 5432

to_port = 5432

},

{

protocol = "tcp"

rule_no = 300

action = "allow"

cidr_block = "10.0.0.0/24"

from_port = 5432

to_port = 5432

}

]

subnet_id = dependency.private-subnets.outputs.private_subnets[2]

tags = {}

},

# RDS NACL B

{

name = "eks-demo-rds-nacl-b"

vpc_id = dependency.vpc.outputs.vpc_id

egress = [

{

protocol = "tcp"

rule_no = 100

action = "allow"

cidr_block = "10.0.100.0/24"

from_port = 1024

to_port = 65535

},

{

protocol = "tcp"

rule_no = 200

action = "allow"

cidr_block = "10.0.200.0/24"

from_port = 1024

to_port = 65535

},

{

protocol = "tcp"

rule_no = 300

action = "allow"

cidr_block = "10.0.0.0/24"

from_port = 1024

to_port = 65535

}

]

ingress = [

{

protocol = "tcp"

rule_no = 100

action = "allow"

cidr_block = "10.0.100.0/24"

from_port = 5432

to_port = 5432

},

{

protocol = "tcp"

rule_no = 200

action = "allow"

cidr_block = "10.0.200.0/24"

from_port = 5432

to_port = 5432

},

{

protocol = "tcp"

rule_no = 300

action = "allow"

cidr_block = "10.0.0.0/24"

from_port = 5432

to_port = 5432

}

]

subnet_id = dependency.private-subnets.outputs.private_subnets[3]

tags = {}

}

]

}
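NACL rules are evaluated in ascending rule number order, the first matching rule decides, and traffic that matches no rule is denied. NACLs are also stateless, which is why the data NACLs above carry egress rules for the ephemeral port range 1024-65535 so that replies can travel back to the clients. As a rough model of the ingress side only (TCP assumed, helper names hypothetical), the evaluation can be sketched like this:

```bash
#!/bin/bash
# Ingress rules copied from the data-subnet NACLs above,
# one per line: rule_no action cidr from_port to_port.
DATA_INGRESS_RULES='100 allow 10.0.100.0/24 5432 5432
200 allow 10.0.200.0/24 5432 5432
300 allow 10.0.0.0/24 5432 5432'

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed when IPv4 address $1 falls inside CIDR block $2.
ip_in_cidr() (
  ip=$(ip_to_int "$1")
  base=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  size=$(( 1 << (32 - prefix) ))
  [ $(( ip / size )) -eq $(( base / size )) ]
)

# Print the action of the first rule matching source IP $1 and port $2,
# or "deny" when no rule matches (the implicit final rule).
nacl_ingress() (
  verdict=$(
    echo "$DATA_INGRESS_RULES" | sort -n | while read -r rule_no action cidr from to; do
      if ip_in_cidr "$1" "$cidr" && [ "$2" -ge "$from" ] && [ "$2" -le "$to" ]; then
        echo "$action"
        break
      fi
    done
  )
  echo "${verdict:-deny}"
)

nacl_ingress 10.0.100.25 5432   # host in app subnet A reaching PostgreSQL
nacl_ingress 10.0.100.25 22     # same host, SSH
nacl_ingress 10.0.10.7 5432     # host in a data subnet
```

With the rules above, the three calls print allow, deny and deny respectively.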

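k) infra-live/dev/security-group/terragrunt.hcl

This module uses the Security Group building block as its Terraform source to create a security group for our nodes and bastion host.

Again, its rules will be very permissive, but we'll correct that in the next article.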

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/security-group.git"

}

dependency "vpc" {

config_path = "../vpc"

}

dependency "public-subnets" {

config_path = "../public-subnets"

}

dependency "private-subnets" {

config_path = "../private-subnets"

}

inputs = {

vpc_id = dependency.vpc.outputs.vpc_id

name = "public-sg"

description = "Open security group"

ingress_rules = [

{

protocol = "-1"

from_port = 0

to_port = 0

cidr_blocks = ["0.0.0.0/0"]

}

]

egress_rules = [

{

protocol = "-1"

from_port = 0

to_port = 0

cidr_blocks = ["0.0.0.0/0"]

}

]

tags = {}

}

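l) infra-live/dev/bastion-role/terragrunt.hcl

This module uses the IAM Role building block as its Terraform source to create an IAM role with the permissions our bastion host needs to perform EKS actions and to be managed by Systems Manager.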

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/iam-role.git"

}

inputs = {

principals = [

{

type = "Service"

identifiers = ["ec2.amazonaws.com"]

}

]

policy_name = "EKSDemoBastionPolicy"

policy_attachments = [

{

# Customer-managed policy: replace the account ID below with your own AWS account ID

arn = "arn:aws:iam::534876755051:policy/AmazonEKSFullAccessPolicy"

},

{

arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"

}

]

policy_statements = []

role_name = "EKSDemoBastionRole"

}
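Assuming the IAM Role building block turns the principals input into a standard trust (assume-role) policy, the resulting document would look roughly like what the sketch below prints. The trust_policy helper is hypothetical and only illustrates the shape of the JSON:

```bash
#!/bin/bash
# Print a trust policy that lets the given AWS service assume the role.
trust_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "$1" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# The bastion role trusts EC2, as in the principals input above.
trust_policy ec2.amazonaws.com
```

The same shape applies to the other roles in this article, with only the service identifier changing.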

m) infra-live/dev/bastion-instance-profile/terragrunt.hcl

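This module uses the Instance Profile building block as its Terraform source to create an IAM instance profile for our bastion host. The IAM role created in the previous step is attached to this instance profile.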

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/instance-profile.git"

}

dependency "iam-role" {

config_path = "../bastion-role"

}

inputs = {

instance_profile_name = "EKSBastionInstanceProfile"

path = "/"

iam_role_name = dependency.iam-role.outputs.role_name

instance_profile_tags = {}

}

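n) infra-live/dev/bastion-ec2/terragrunt.hcl

This module uses the EC2 building block as its Terraform source to create an EC2 instance that we'll use as a jump box (or bastion host) to manage the worker nodes in our EKS cluster.

The bastion host will be placed in our public subnet, with the instance profile we created in the previous step and our permissive security group attached.

It is a Linux instance of type t2.micro using the Amazon Linux 2023 AMI, configured with a user data script. This script is defined in the next step.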

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/ec2.git"

}

dependency "public-subnets" {

config_path = "../public-subnets"

}

dependency "instance-profile" {

config_path = "../bastion-instance-profile"

}

dependency "security-group" {

config_path = "../security-group"

}

inputs = {

instance_name = "eks-bastion-host"

use_instance_profile = true

instance_profile_name = dependency.instance-profile.outputs.name

most_recent_ami = true

owners = ["amazon"]

ami_name_filter = "name"

ami_values_filter = ["al2023-ami-2023.*-x86_64"]

instance_type = "t2.micro"

subnet_id = dependency.public-subnets.outputs.public_subnets[0]

existing_security_group_ids = [dependency.security-group.outputs.security_group_id]

assign_public_ip = true

uses_ssh = false

keypair_name = ""

use_userdata = true

userdata_script_path = "user-data.sh"

user_data_replace_on_change = true

extra_tags = {}

}
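The ami_values_filter input relies on the * wildcard that EC2 name filters accept. To get a feel for which AMI names the pattern would select, here is a small sketch that approximates the match with a shell glob. The AMI names are illustrative (their build numbers are made up), and matches_ami_filter is a hypothetical helper:

```bash
#!/bin/bash
# Succeed when an AMI name matches the pattern used in ami_values_filter.
matches_ami_filter() {
  case $1 in
    al2023-ami-2023.*-x86_64) return 0 ;;
    *) return 1 ;;
  esac
}

# Illustrative names: standard x86_64, standard arm64, minimal x86_64.
for name in \
  "al2023-ami-2023.4.20240401.1-kernel-6.1-x86_64" \
  "al2023-ami-2023.4.20240401.1-kernel-6.1-arm64" \
  "al2023-ami-minimal-2023.4.20240401.1-kernel-6.1-x86_64"; do
  if matches_ami_filter "$name"; then
    echo "match:    $name"
  else
    echo "no match: $name"
  fi
done
```

Only the first name matches, so the filter excludes arm64 and minimal images; most_recent_ami = true then picks the newest of the remaining candidates.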

o) infra-live/dev/bastion-ec2/user-data.sh

This user data script installs the AWS CLI, the kubectl and eksctl tools, and Docker. It also sets up an alias (k) for kubectl, along with bash completion.

#!/bin/bash

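# cloud-init already runs this script as root; the line below opens a separate ec2-user login shell and does not change the user for the commands that follow

sudo su - ec2-user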

# Update software packages

sudo yum update -y

# Download AWS CLI package

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

# Unzip file

unzip -q awscliv2.zip

# Install AWS CLI

./aws/install

# Check AWS CLI version

aws --version

# Download kubectl binary

sudo curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

# Give the binary executable permissions

sudo chmod +x ./kubectl

# Move binary to directory in system’s path

sudo mv kubectl /usr/local/bin/

export PATH=/usr/local/bin:$PATH

# Check kubectl version

kubectl version --client

# Installing kubectl bash completion on Linux

## If bash-completion is not installed on Linux, install the 'bash-completion' package

## via your distribution's package manager.

## Load the kubectl completion code for bash into the current shell

echo 'source <(kubectl completion bash)' >>~/.bash_profile

## Write bash completion code to a file and source it from .bash_profile

# kubectl completion bash > ~/.kube/completion.bash.inc

# printf "

# # kubectl shell completion

# source '$HOME/.kube/completion.bash.inc'

# " >> $HOME/.bash_profile

# source $HOME/.bash_profile

# Set bash completion for kubectl alias (k)

echo 'alias k=kubectl' >>~/.bashrc

echo 'complete -o default -F __start_kubectl k' >>~/.bashrc

source ~/.bashrc

# Get platform

ARCH=amd64

PLATFORM=$(uname -s)_$ARCH

# Download eksctl tool for platform

curl -sLO "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_$PLATFORM.tar.gz"

# (Optional) Verify checksum

curl -sL "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_checksums.txt" | grep $PLATFORM | sha256sum --check

# Extract binary

tar -xzf eksctl_$PLATFORM.tar.gz -C /tmp && rm eksctl_$PLATFORM.tar.gz

# Move binary to directory in system’s path

sudo mv /tmp/eksctl /usr/local/bin

# Check eksctl version

eksctl version

# Enable eksctl bash completion

. <(eksctl completion bash)

# Update system

sudo yum update -y

# Install Docker

sudo yum install docker -y

# Start Docker

sudo service docker start

# Add ec2-user to docker group

sudo usermod -a -G docker ec2-user

# Start a shell with the docker group applied

newgrp docker

# Ensure docker is on

sudo chkconfig docker on
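Because user data runs unattended, a failed download or install is easy to miss. Once the instance is up, a quick check along these lines could confirm that the tools ended up on the PATH. This is only a sketch; missing_tools is a hypothetical helper, not something the script above defines:

```bash
#!/bin/bash
# Print each named tool that is not found on PATH, one per line.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# The tools the user data script is expected to install.
missing=$(missing_tools aws kubectl eksctl docker)
if [ -n "$missing" ]; then
  echo "not installed:" $missing
else
  echo "all tools installed"
fi
```

If anything is reported as missing, the cloud-init log on the instance (typically /var/log/cloud-init-output.log) is the first place to look.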

p) infra-live/dev/eks-cluster-role/terragrunt.hcl

This module uses the IAM Role building block as its Terraform source to create an IAM role for the EKS cluster. The role has the managed policy AmazonEKSClusterPolicy attached.

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/iam-role.git"

}

inputs = {

principals = [

{

type = "Service"

identifiers = ["eks.amazonaws.com"]

}

]

policy_name = "EKSDemoClusterRolePolicy"

policy_attachments = [

{

arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"

}

]

policy_statements = []

role_name = "EKSDemoClusterRole"

}

q) infra-live/dev/eks-cluster/terragrunt.hcl

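This module uses the EKS Cluster building block as its Terraform source to create an EKS cluster that uses the IAM role created in the previous step.

The cluster will provision ENIs (Elastic Network Interfaces) in the private subnets we created, for use by the EKS worker nodes.

The cluster also has several cluster log types enabled for auditing purposes.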

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/eks-cluster.git"

}

dependency "private-subnets" {

config_path = "../private-subnets"

}

dependency "iam-role" {

config_path = "../eks-cluster-role"

}

inputs = {

name = "eks-demo"

subnet_ids = [dependency.private-subnets.outputs.private_subnets[0], dependency.private-subnets.outputs.private_subnets[1]]

cluster_role_arn = dependency.iam-role.outputs.role_arn

enabled_cluster_log_types = ["api", "audit", "authenticator", "controllerManager", "scheduler"]

}

r) infra-live/dev/eks-addons/terragrunt.hcl

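This module uses the EKS Add-ons building block as its Terraform source to activate add-ons for our EKS cluster.

This is important because these add-ons handle networking within the AWS VPC using VPC features (vpc-cni), cluster domain name resolution (coredns), network connectivity between services and pods in the cluster (kube-proxy), management of IAM credentials in the cluster (eks-pod-identity-agent), and EKS management of the lifecycle of EBS volumes (aws-ebs-csi-driver).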

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/eks-addon.git"

}

dependency "cluster" {

config_path = "../eks-cluster"

}

inputs = {

cluster_name = dependency.cluster.outputs.name

addons = [

{

name = "vpc-cni"

version = "v1.18.0-eksbuild.1"

},

{

name = "coredns"

version = "v1.11.1-eksbuild.6"

},

{

name = "kube-proxy"

version = "v1.29.1-eksbuild.2"

},

{

name = "aws-ebs-csi-driver"

version = "v1.29.1-eksbuild.1"

},

{

name = "eks-pod-identity-agent"

version = "v1.2.0-eksbuild.1"

}

]

}
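The add-on versions above follow the pattern v<upstream version>-eksbuild.<build number>, and each has to be compatible with the cluster's Kubernetes version. As a small sketch (the helper names are hypothetical), the two parts can be separated with shell parameter expansion:

```bash
#!/bin/bash
# Upstream version of an add-on, e.g. v1.18.0-eksbuild.1 -> 1.18.0
addon_upstream_version() {
  version=${1%%-eksbuild.*}
  echo "${version#v}"
}

# EKS build number of an add-on, e.g. v1.18.0-eksbuild.1 -> 1
addon_eks_build() {
  echo "${1##*-eksbuild.}"
}

# The versions pinned in the inputs above.
for addon in \
  "vpc-cni v1.18.0-eksbuild.1" \
  "coredns v1.11.1-eksbuild.6" \
  "kube-proxy v1.29.1-eksbuild.2"; do
  set -- $addon
  echo "$1: upstream $(addon_upstream_version "$2"), EKS build $(addon_eks_build "$2")"
done
```

To find out which versions are valid for a given cluster, aws eks describe-addon-versions with --addon-name and --kubernetes-version lists the compatible ones.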

s) infra-live/dev/worker-node-role/terragrunt.hcl

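This module uses the IAM Role building block as its Terraform source to create an IAM role for the EKS worker nodes.

This role grants the node group permission to perform operations within the cluster, and allows its nodes to be managed by Systems Manager.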

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/iam-role.git"

}

inputs = {

principals = [

{

type = "Service"

identifiers = ["ec2.amazonaws.com"]

}

]

policy_name = "EKSDemoWorkerNodePolicy"

policy_attachments = [

{

arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"

},

{

arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"

},

{

arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"

},

{

arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"

},

{

arn = "arn:aws:iam::aws:policy/AmazonEKSVPCResourceController"

}

]

policy_statements = []

role_name = "EKSDemoWorkerNodeRole"

}

t) infra-live/dev/eks-node-group/terragrunt.hcl

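This module uses the EKS Node Group building block as its Terraform source to create a node group in the cluster.

The nodes in the node group will be provisioned in the VPC's private subnets, and we'll use on-demand Linux instances of type m5.4xlarge with the AL2_x86_64 AMI type and a disk size of 20 GB. We use m5.4xlarge instances because they support trunking, which we'll need in the next article to deploy pods and associate security groups with them.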

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/eks-node-group.git"

}

dependency "cluster" {

config_path = "../eks-cluster"

}

dependency "iam-role" {

config_path = "../worker-node-role"

}

dependency "private-subnets" {

config_path = "../private-subnets"

}

inputs = {

cluster_name = dependency.cluster.outputs.name

node_role_arn = dependency.iam-role.outputs.role_arn

node_group_name = "eks-demo-node-group"

scaling_config = {

desired_size = 2

max_size = 4

min_size = 1

}

subnet_ids = [dependency.private-subnets.outputs.private_subnets[0], dependency.private-subnets.outputs.private_subnets[1]]

update_config = {

max_unavailable_percentage = 50

}

ami_type = "AL2_x86_64"

capacity_type = "ON_DEMAND"

disk_size = 20

instance_types = ["m5.4xlarge"]

}
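A node group's scaling_config is only accepted when min_size <= desired_size <= max_size. A pre-flight check for that constraint could be sketched as follows; valid_scaling is a hypothetical helper taking the three sizes in that order:

```bash
#!/bin/bash
# Succeed when min_size ($1) <= desired_size ($2) <= max_size ($3).
valid_scaling() {
  [ "$1" -le "$2" ] && [ "$2" -le "$3" ]
}

# Values from the scaling_config above: min 1, desired 2, max 4.
if valid_scaling 1 2 4; then
  echo "scaling_config is consistent"
else
  echo "scaling_config is inconsistent"
fi
```

For the values used here the check reports that the configuration is consistent.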

u) infra-live/dev/eks-pod-iam/terragrunt.hcl

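This module uses the IAM OIDC building block as its Terraform source to create the resources that allow pods to assume IAM roles and communicate with other AWS services.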

include "root" {

path = find_in_parent_folders()

}

terraform {

source = "git@github.com:<name_or_org>/iam-oidc.git"

}

dependency "cluster" {

config_path = "../eks-cluster"

}

inputs = {

role_name = "EKSDemoPodIAMAuth"

oidc_issuer = dependency.cluster.outputs.oidc_tls_issuer

client_id_list = ["sts.amazonaws.com"]

}
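When pods assume a role through the cluster's OIDC provider, the role's trust policy usually refers to the provider by ARN and restricts the token's sub claim to a specific service account (and its aud claim to sts.amazonaws.com, the client ID above). The sketch below derives those strings from an issuer URL. The account ID, issuer ID, namespace and service account name are placeholders, and the helper names are hypothetical:

```bash
#!/bin/bash
# Strip the scheme from an OIDC issuer URL.
oidc_provider_path() {
  echo "${1#https://}"
}

# ARN of the IAM OIDC provider for account $1 and issuer URL $2.
oidc_provider_arn() {
  echo "arn:aws:iam::$1:oidc-provider/$(oidc_provider_path "$2")"
}

# Value of the token's "sub" claim for namespace $1, service account $2.
irsa_subject() {
  echo "system:serviceaccount:$1:$2"
}

# Placeholder values for illustration only.
issuer="https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"

oidc_provider_arn 111122223333 "$issuer"
echo "condition key:   $(oidc_provider_path "$issuer"):sub"
echo "condition value: $(irsa_subject default demo-sa)"
```

In a trust policy, the condition key and value printed here would go into a StringEquals condition next to an Action of sts:AssumeRoleWithWebIdentity.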

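With all of this done, we now need to create a GitHub repository for our Terragrunt code and push the code to it. We should also configure repository secrets for our AWS credentials (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_DEFAULT_REGION), as well as an SSH private key that we'll use to access the repositories containing our Terraform building blocks.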
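A missing secret reaches a workflow step as an empty environment variable, which tends to fail late and with a confusing error. A small guard at the start of a step can catch that early. This is a sketch; require_env is a hypothetical helper and not part of the original workflow:

```bash
#!/bin/bash
# Fail and list the names of any variables that are unset or empty.
require_env() {
  missing=""
  for name in "$@"; do
    eval "value=\${$name:-}"
    [ -n "$value" ] || missing="$missing $name"
  done
  if [ -n "$missing" ]; then
    echo "missing environment variables:$missing" >&2
    return 1
  fi
}

# Variable names used for the AWS credentials in this article.
require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION \
  || echo "configure the repository secrets before running the pipeline"
```

In a real step the fallback would more likely be exit 1, so that the job stops before Terragrunt runs.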

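Once that's done, we can move on to creating a GitHub Actions workflow to automate the provisioning of our infrastructure.

3. Create a GitHub Actions workflow for automated infrastructure provisioning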

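Now that our code is versioned, we can write a workflow that is triggered whenever we push code to the main branch (use whichever branch you prefer, such as master). Ideally, this workflow should only be triggered after a pull request has been approved and merged into the main branch, but we'll keep things simple for illustration purposes.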

First, create a .github/workflows directory at the root of the infra-live project. Then create a YAML file named deploy.yml inside this infra-live/.github/workflows directory.

We'll add the following code to our infra-live/.github/workflows/deploy.yml file to handle the provisioning of our infrastructure:

name: Deploy

on:
  push:
    branches:
      - main
  pull_request:
    branches:
      - main

jobs:
  terraform:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v2

      - name: Setup SSH
        uses: webfactory/ssh-agent@v0.4.1
        with:
          ssh-private-key: ${{ secrets.SSH_PRIVATE_KEY }}

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v2
        with:
          terraform_version: 1.5.5
          terraform_wrapper: false

      - name: Setup Terragrunt
        run: |
          curl -LO "https://github.com/gruntwork-io/terragrunt/releases/download/v0.48.1/terragrunt_linux_amd64"
          chmod +x terragrunt_linux_amd64
          sudo mv terragrunt_linux_amd64 /usr/local/bin/terragrunt
          terragrunt -v

      - name: Apply Terraform changes
        run: |
          cd dev
          terragrunt run-all apply -auto-approve --terragrunt-non-interactive -var AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID -var AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY -var AWS_REGION=$AWS_DEFAULT_REGION
          cd bastion-ec2
          ip=$(terragrunt output instance_public_ip)
          echo "$ip"
          echo "$ip" > public_ip.txt
          cat public_ip.txt
          pwd
        env:
          AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
          AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          AWS_DEFAULT_REGION: ${{ secrets.AWS_DEFAULT_REGION }}

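Let's break down what this file does:

a) The name: Deploy line names our workflow Deploy.

b) The following lines tell GitHub to trigger this workflow whenever code is pushed to the main branch, or whenever a pull request targeting the main branch is opened or updated: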

on:
  push:
    branches:
      - main
  pull_request:
    branches:
      - main
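Because the workflow also runs for pull requests, the apply step would change real infrastructure before a change has been reviewed. One way to soften this, sketched below, is to choose the Terragrunt command from the GITHUB_EVENT_NAME variable that GitHub Actions sets on every run. The terragrunt_action helper is hypothetical and the original workflow does not do this:

```bash
#!/bin/bash
# Choose the Terragrunt command from the event that triggered the workflow:
# apply on pushes, plan for everything else (including pull requests).
terragrunt_action() {
  case ${1:-} in
    push) echo "apply" ;;
    *)    echo "plan" ;;
  esac
}

action=$(terragrunt_action "${GITHUB_EVENT_NAME:-}")
echo "would run: terragrunt run-all $action --terragrunt-non-interactive"
```

Outside of GitHub Actions the variable is unset, so the sketch falls back to the non-destructive plan.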

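c) We then define a job named terraform with the following lines, telling GitHub to use a runner running the latest version of Ubuntu. Think of a runner as a GitHub server that executes the commands in this workflow file for us: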

jobs:
  terraform:
    runs-on: ubuntu-latest

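d) We then define a series of steps, or blocks of commands, that will be executed in order.

The first step uses a GitHub action to check out our infra-live repository onto the runner so that we can start working with it: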

      - name: Checkout repository
        uses: actions/checkout@v2

The next step uses another GitHub action to help us easily set up SSH on the GitHub runner, using the private key we defined as a repository secret:

      - name: Setup SSH
        uses: webfactory/ssh-agent@v0.4.1
        with:
          ssh-private-key: ${{ secrets.SSH_PRIVATE_KEY }}

The following step uses yet another GitHub action to help us easily install Terraform on the GitHub runner, specifying the exact version we need:

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v2
        with:
          terraform_version: 1.5.5
          terraform_wrapper: false

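We then use another step to execute a series of commands that install Terragrunt on the GitHub runner. We use the terragrunt -v command to check the installed Terragrunt version and confirm that the installation succeeded: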

      - name: Setup Terragrunt
        run: |
          curl -LO "https://github.com/gruntwork-io/terragrunt/releases/download/v0.48.1/terragrunt_linux_amd64"
          chmod +x terragrunt_linux_amd64
          sudo mv terragrunt_linux_amd64 /usr/local/bin/terragrunt
          terragrunt -v
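The download URL in this step hard-codes the version, operating system and architecture. If you want to reuse the step on other runners, the URL could be assembled from those three parts instead. This is a sketch with hypothetical helper names; the asset naming follows the URL used above:

```bash
#!/bin/bash
# Build the release download URL for version $1, OS $2 and architecture $3.
terragrunt_url() {
  echo "https://github.com/gruntwork-io/terragrunt/releases/download/${1}/terragrunt_${2}_${3}"
}

# Map `uname -m` output to the architecture names used in release assets.
release_arch() {
  case $1 in
    x86_64)        echo "amd64" ;;
    aarch64|arm64) echo "arm64" ;;
    *)             echo "$1" ;;
  esac
}

os=$(uname -s | tr '[:upper:]' '[:lower:]')
arch=$(release_arch "$(uname -m)")
terragrunt_url v0.48.1 "$os" "$arch"
```

On an x86_64 Linux runner this prints the same URL that the step above downloads from.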

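Finally, we use a step to apply our Terraform changes, followed by a series of commands that retrieve the public IP address of the EC2 instance we provisioned and save it to a file named public_ip.txt: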

      - name: Apply Terraform changes
        run: |
          cd dev
          terragrunt run-all apply -auto-approve --terragrunt-non-interactive -var AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID -var AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY -var AWS_REGION=$AWS_DEFAULT_REGION
          cd bastion-ec2
          ip=$(terragrunt output instance_public_ip)
          echo "$ip"
          echo "$ip" > public_ip.txt
          cat public_ip.txt
          pwd
        env:
          AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
          AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          AWS_DEFAULT_REGION: ${{ secrets.AWS_DEFAULT_REGION }}
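One detail worth knowing about the output command: for a string output, terraform output prints the value wrapped in double quotes, so public_ip.txt will contain the quotes as well. Passing -raw to the output command avoids this; alternatively, the quotes can be stripped in the shell, as in this sketch (strip_quotes is a hypothetical helper, and the address is a documentation-range example):

```bash
#!/bin/bash
# Remove one leading and one trailing double quote, if present.
strip_quotes() {
  value=${1#\"}
  echo "${value%\"}"
}

# Example of what the quoted output might look like.
raw='"203.0.113.10"'
strip_quotes "$raw"
```

Here the sketch prints 203.0.113.10 without the surrounding quotes.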

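And that's it! We can now watch the pipeline get triggered when we push code to our main branch, and see our EKS cluster being provisioned.

In the next article, we'll secure our cluster, then access our bastion host and get our hands dirty with real Kubernetes action!

I hope you enjoyed this article. If you have any questions or remarks, feel free to leave a comment below.

See you soon!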

Source: https://dev.to/aws-builders/provision-eks-cluster-with-terraform-terragrunt-github-actions-1c64
